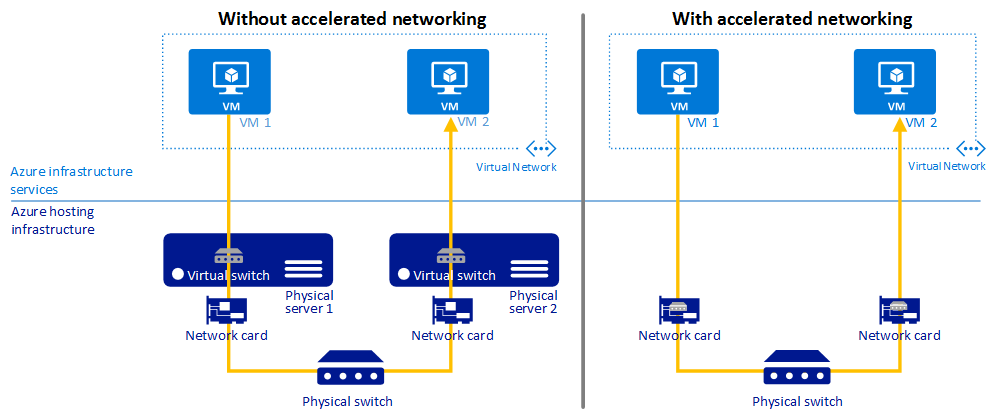

Azure has a feature called Accelerated Networking, available for specific VM sizes on the Azure platform. It creates a datapath directly from the VM to the Mellanox network card in the Azure hosting infrastructure, bypassing the virtual switch and virtualization layer on the physical server. This greatly increases the throughput capabilities of the virtual machines. You can find more information on this link.

Accelerated Networking is supported on the D/DSv2 and F/Fs VM series with 2 or more vCPUs. VM series that support hyper-threading, such as the D/DSv3, E/ESv3, Fsv2 and Ms/Mms VMs, are supported with 4 or more vCPUs.
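As a rough illustration, the sizing rule above can be written down as a small lookup. This is only a sketch of the rule as stated in this post: the series labels and the function name `supports_accelerated_networking` are my own shorthand, not official Azure size names, and the list is not exhaustive.

```python
from typing import Dict

# Minimum vCPU count per VM series, as described in the text above.
# Keys are lower-cased shorthand labels (an assumption for this sketch).
MIN_VCPUS: Dict[str, int] = {
    # Series without hyper-threading: 2 or more vCPUs
    "dv2": 2, "dsv2": 2, "f": 2, "fs": 2,
    # Hyper-threaded series: 4 or more vCPUs
    "dv3": 4, "dsv3": 4, "ev3": 4, "esv3": 4, "fsv2": 4, "ms": 4, "mms": 4,
}


def supports_accelerated_networking(series: str, vcpus: int) -> bool:
    """Return True if the series/vCPU combination meets the rule above."""
    minimum = MIN_VCPUS.get(series.lower())
    # Unknown series are treated as unsupported in this sketch.
    return minimum is not None and vcpus >= minimum
```

For example, `supports_accelerated_networking("DSv3", 2)` is false because the hyper-threaded series need at least 4 vCPUs.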

Deployment

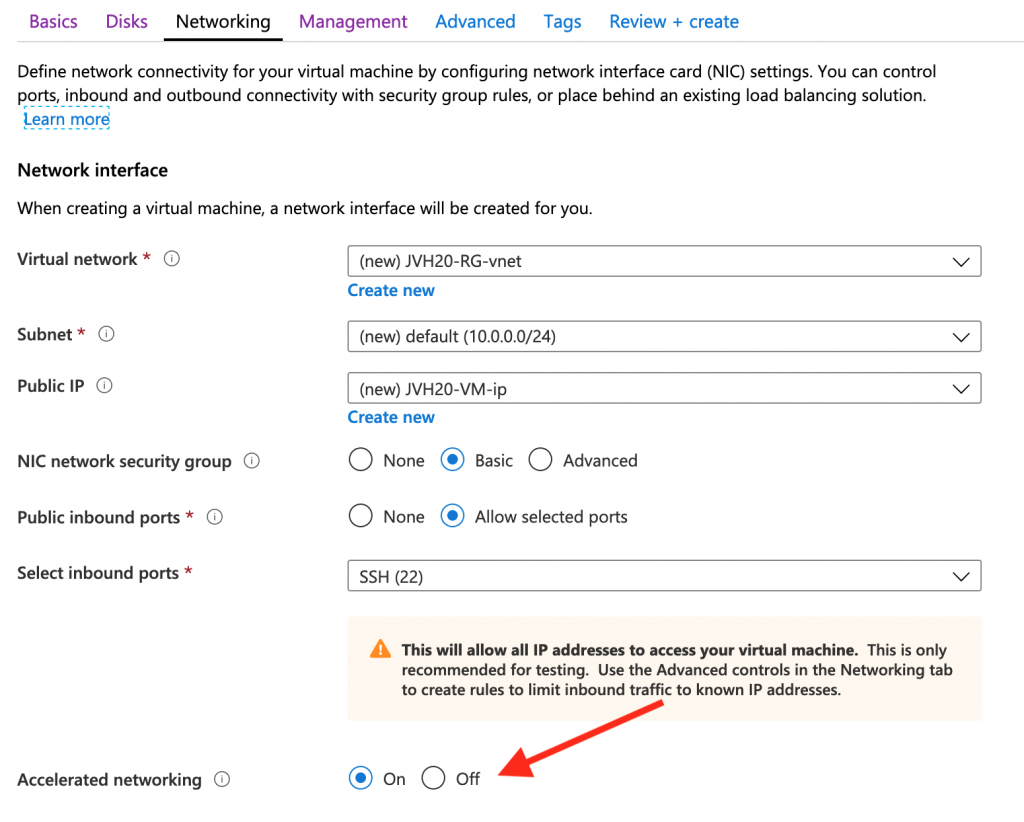

You can enable Accelerated Networking by creating the NIC with it during the initial creation of the VM, or you can enable it after the VM has been created.
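For the second path, enabling it on an existing VM, the order of operations matters because the VM has to be deallocated first. The sketch below only builds the Azure CLI commands for that sequence, assuming the `az` CLI is installed and logged in. The resource group, VM and NIC names are placeholders, and the helper names `enable_commands` and `run` are hypothetical.

```python
import subprocess
from typing import List


def enable_commands(resource_group: str, vm_name: str, nic_name: str) -> List[List[str]]:
    """Build the az commands, in order: deallocate, update NIC, start."""
    return [
        ["az", "vm", "deallocate",
         "--resource-group", resource_group, "--name", vm_name],
        ["az", "network", "nic", "update",
         "--resource-group", resource_group, "--name", nic_name,
         "--accelerated-networking", "true"],
        ["az", "vm", "start",
         "--resource-group", resource_group, "--name", vm_name],
    ]


def run(commands: List[List[str]], dry_run: bool = True) -> None:
    """Print the commands, or execute them when dry_run is False."""
    for cmd in commands:
        if dry_run:
            print(" ".join(cmd))
        else:
            # check=True stops the sequence if any step fails
            subprocess.run(cmd, check=True)
```

Calling `run(enable_commands("ResourceGroup1", "VM1", "NetworkInterface1"))` prints the three commands without touching any resources, which makes it easy to review them before running with `dry_run=False`.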

Azure Marketplace

In the Azure Marketplace, any VM that supports “Accelerated Networking” has a property to enable it during initial creation.

Azure PowerShell

Via Azure PowerShell you can enable “Accelerated Networking” on the NIC. The VM needs to be deallocated for this. More information on the whole procedure can be found here.

    $nic = Get-AzNetworkInterface -ResourceGroupName "ResourceGroup1" -Name "NetworkInterface1"
    $nic.EnableAcceleratedNetworking = $true
    $nic | Set-AzNetworkInterface

Azure CLI

Azure CLI can be used as well to enable Accelerated Networking either during creation or afterwards. The VM needs to be deallocated to enable the setting.

    az network nic update --accelerated-networking true --name NetworkInterface1 --resource-group MyResourceGroup1

ARM template

If you have your own ARM template to deploy your VMs you can of course enable Accelerated Networking as well. Documentation can be found here.

    {
      "apiVersion": "[variables('networkApiVersion')]",
      "type": "Microsoft.Network/networkInterfaces",
      "name": "NetworkInterface1",
      "location": "westeurope",
      "properties": {
        "ipConfigurations": [
          {
            "name": "ipconfig1",
            "properties": {
              "privateIPAllocationMethod": "Dynamic",
              "subnet": {
                "id": "[variables('subnetId')]"
              }
            }
          }
        ],
        "enableAcceleratedNetworking": true,
        "networkSecurityGroup": {
          "id": "[variables('nsgId')]"
        }
      }
    },

Terraform template

And of course you can also set this in Terraform templates, as in this example. More information can be found here.

    resource "azurerm_network_interface" "NetworkInterface1" {
      name                          = "NetworkInterface1"
      location                      = "westeurope"
      resource_group_name           = "JVH20-RG"
      enable_accelerated_networking = true
      network_security_group_id     = "${azurerm_network_security_group.nsg.id}"

      ip_configuration {
        name                          = "interface1"
        subnet_id                     = "${azurerm_subnet.subnet.id}"
        private_ip_address_allocation = "dynamic"
      }
    }

Verification

Once deployed, the question remains: “Do we have Accelerated Networking enabled on the VM?” There are a couple of quick verification steps you can perform, depending on the operating system you deployed.

Linux

In Linux there are several places where you can verify Accelerated Networking. First of all, you can check that the Mellanox driver has been loaded and is active:

    # lspci -v
    0001:00:02.0 Ethernet controller: Mellanox Technologies MT27500/MT27520 Family [ConnectX-3/ConnectX-3 Pro Virtual Function]
        Subsystem: Mellanox Technologies MT27500/MT27520 Family [ConnectX-3/ConnectX-3 Pro Virtual Function]
        Physical Slot: 1
        Flags: bus master, fast devsel, latency 0
        Memory at fe0000000 (64-bit, prefetchable) [size=8M]
        Capabilities: [60] Express Endpoint, MSI 00
        Capabilities: [9c] MSI-X: Enable+ Count=24 Masked-
        Capabilities: [40] Power Management version 0
        Kernel driver in use: mlx4_core
        Kernel modules: mlx4_core

If the driver is ready to go the network interface configuration can be verified. The output of the ‘ip addr’ command shows the typical eth0 interface and a virtual/pseudo interface. In this case it is called ‘enP1s1’ and in the output it mentions that the master interface is ‘eth0’.

    # ip addr
    1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: mtu 1500 qdisc mq state UP group default qlen 1000
        link/ether 00:0d:3a:04:4b:ec brd ff:ff:ff:ff:ff:ff
        inet 10.0.0.4/24 brd 10.0.0.255 scope global eth0
           valid_lft forever preferred_lft forever
        inet6 fe80::20d:3aff:fe04:4bec/64 scope link
           valid_lft forever preferred_lft forever
    3: enP1s1: mtu 1500 qdisc mq master eth0 state UP group default qlen 1000
        link/ether 00:0d:3a:04:4b:ec brd ff:ff:ff:ff:ff:ff
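The ‘master’ relationship in this output can also be picked out with a few lines of Python. This is a minimal sketch that assumes the text format of ‘ip addr’ shown above. The function name `find_slave_interfaces` is hypothetical, and interface names will differ per VM.

```python
import re
from typing import Dict

# Matches interface header lines such as
# "3: enP1s1: ... qdisc mq master eth0 state UP ..."
_LINK_RE = re.compile(r"^\d+:\s+([^:\s]+):.*?\bmaster\s+(\S+)", re.MULTILINE)


def find_slave_interfaces(ip_addr_output: str) -> Dict[str, str]:
    """Map each enslaved interface name to the name of its master."""
    return {slave: master for slave, master in _LINK_RE.findall(ip_addr_output)}
```

On the output above this would report `enP1s1` with `eth0` as its master. An empty result suggests that no virtual function interface is bonded to the synthetic interface.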

Next stop is ‘ethtool’, which will give you some final clues. First of all, the reported speed is ‘40000Mb/s’.

    # ethtool eth0
    Settings for eth0:
        Supported ports: [ ]
        Supported link modes: Not reported
        Supported pause frame use: No
        Supports auto-negotiation: No
        Advertised link modes: Not reported
        Advertised pause frame use: No
        Advertised auto-negotiation: No
        Speed: 40000Mb/s
        Duplex: Full
        Port: Other
        PHYAD: 0
        Transceiver: internal
        Auto-negotiation: off
        Link detected: yes

Another option in ethtool is to look for activity on the virtual function using the command ‘ethtool -S eth0 | grep vf_’. Non-zero counters indicate that traffic is flowing via the virtual/pseudo network interface.

    # ethtool -S eth0 | grep vf_
    vf_rx_packets: 15675
    vf_rx_bytes: 205105559
    vf_tx_packets: 41133
    vf_tx_bytes: 13694062
    vf_tx_dropped: 0

A final indicator is to verify that the Mellanox driver is attached to the interrupts by inspecting the output of /proc/interrupts.

    # cat /proc/interrupts
               CPU0       CPU1
      1:          9          0   IO-APIC    1-edge      i8042
      4:       2550         97   IO-APIC    4-edge      ttyS0
      8:          0          0   IO-APIC    8-edge      rtc0
      9:          0          0   IO-APIC    9-fasteoi   acpi
     12:          0          3   IO-APIC   12-edge      i8042
     14:          0          0   IO-APIC   14-edge      ata_piix
     15:        435       3238   IO-APIC   15-edge      ata_piix
     24:        320      38951   Hyper-V PCIe MSI 134250496-edge      mlx4-async@pci:0001:00:02.0
     25:        163      25656   Hyper-V PCIe MSI 134250497-edge      mlx4-1@0001:00:02.0
     26:          0      31096   Hyper-V PCIe MSI 134250498-edge      mlx4-2@0001:00:02.0
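The last two checks, the vf_ counters from ‘ethtool -S’ and the Mellanox entries in /proc/interrupts, lend themselves to a small script. The following is a sketch under the assumption that the output looks like the samples above. The function names are hypothetical, and the driver string `mlx4` is taken from this particular VM, so other hardware may need a different pattern.

```python
from typing import Dict, List


def vf_counters(ethtool_stats: str) -> Dict[str, int]:
    """Extract the vf_* counters from `ethtool -S <iface>` output."""
    counters: Dict[str, int] = {}
    for line in ethtool_stats.splitlines():
        name, sep, value = line.partition(":")
        name, value = name.strip(), value.strip()
        if sep and name.startswith("vf_") and value.isdigit():
            counters[name] = int(value)
    return counters


def vf_traffic_seen(ethtool_stats: str) -> bool:
    """True if any packets were received or sent via the virtual function."""
    counters = vf_counters(ethtool_stats)
    return counters.get("vf_rx_packets", 0) > 0 or counters.get("vf_tx_packets", 0) > 0


def mlx4_interrupt_lines(proc_interrupts: str) -> List[str]:
    """Return the interrupt lines that are attached to the mlx4 driver."""
    # "mlx4" matches the driver seen in this post; adjust for other drivers.
    return [line.strip() for line in proc_interrupts.splitlines() if "mlx4" in line]
```

On the VM itself the inputs could come from `subprocess.run(["ethtool", "-S", "eth0"], capture_output=True, text=True).stdout` and from reading `/proc/interrupts`. If `vf_traffic_seen` returns false or `mlx4_interrupt_lines` returns an empty list, traffic is most likely still going through the synthetic path.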

FortiGate

On the FortiGate Next-Generation Firewall it is also possible to enable Accelerated Networking on specific versions (6.2.1+, 6.0.6+ and 5.6.10). Once deployed, it is advised to verify that Accelerated Networking is enabled on the VM using the different debug commands on the CLI.

First of all the virtual/pseudo networking interface needs to be active.

    # fnsysctl ifconfig -a
    lo        Link encap:Local Loopback
              LOOPBACK MTU:16436 Metric:1
              RX packets:0 errors:0 dropped:0 overruns:0 frame:0
              TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:0 (0 Bytes) TX bytes:0 (0 Bytes)
    dummy0    Link encap:Ethernet HWaddr A2:F8:2C:72:0A:32
              BROADCAST NOARP MTU:1500 Metric:1
              RX packets:0 errors:0 dropped:0 overruns:0 frame:0
              TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:0 (0 Bytes) TX bytes:0 (0 Bytes)
    port1     Link encap:Ethernet HWaddr 00:0D:3A:2C:5F:16
              inet addr:172.16.136.5 Bcast:172.16.136.255 Mask:255.255.255.0
              UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1
              RX packets:2652 errors:0 dropped:0 overruns:0 frame:0
              TX packets:280 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:1000
              RX bytes:767281 (749.3 KB) TX bytes:61114 (59.7 KB)
    port2     Link encap:Ethernet HWaddr 00:0D:3A:2D:5D:57
              inet addr:172.16.137.5 Bcast:172.16.137.255 Mask:255.255.255.0
              UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1
              RX packets:107 errors:0 dropped:0 overruns:0 frame:0
              TX packets:29 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:1000
              RX bytes:4494 (4.4 KB) TX bytes:22938 (22.4 KB)
    root      Link encap:Local Loopback
              inet addr:127.0.0.1 Mask:255.0.0.0
              UP LOOPBACK RUNNING MTU:16436 Metric:1
              RX packets:796 errors:0 dropped:0 overruns:0 frame:0
              TX packets:796 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:212916 (207.9 KB) TX bytes:212916 (207.9 KB)
    ssl.root  Link encap:Unknown
              UP POINTOPOINT RUNNING NOARP MULTICAST MTU:1500 Metric:1
              RX packets:0 errors:0 dropped:0 overruns:0 frame:0
              TX packets:0 errors:0 dropped:2 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:0 (0 Bytes) TX bytes:0 (0 Bytes)
    JVH55-1   Link encap:Unknown
              inet addr:169.254.121.1 Mask:255.255.255.255
              UP POINTOPOINT NOARP MULTICAST MTU:1500 Metric:1
              RX packets:0 errors:0 dropped:0 overruns:0 frame:0
              TX packets:0 errors:3 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:0 (0 Bytes) TX bytes:0 (0 Bytes)
    JVH55-2   Link encap:Unknown
              inet addr:169.254.121.1 Mask:255.255.255.255
              UP POINTOPOINT NOARP MULTICAST MTU:1500 Metric:1
              RX packets:0 errors:0 dropped:0 overruns:0 frame:0
              TX packets:0 errors:3 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:0 (0 Bytes) TX bytes:0 (0 Bytes)
    vsys_ha   Link encap:Local Loopback
              inet addr:127.0.0.1 Mask:255.0.0.0
              UP LOOPBACK RUNNING MTU:16436 Metric:1
              RX packets:0 errors:0 dropped:0 overruns:0 frame:0
              TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:0 (0 Bytes) TX bytes:0 (0 Bytes)
    port_ha   Link encap:Ethernet HWaddr 00:40:F4:70:84:D2
              UP BROADCAST MULTICAST MTU:1496 Metric:1
              RX packets:0 errors:0 dropped:0 overruns:0 frame:0
              TX packets:0 errors:0 dropped:1 overruns:0 carrier:0
              collisions:0 txqueuelen:1000
              RX bytes:0 (0 Bytes) TX bytes:0 (0 Bytes)
    vsys_fgfm Link encap:Local Loopback
              inet addr:127.0.0.1 Mask:255.0.0.0
              UP LOOPBACK RUNNING MTU:16436 Metric:1
              RX packets:0 errors:0 dropped:0 overruns:0 frame:0
              TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:0 (0 Bytes) TX bytes:0 (0 Bytes)
    sriovslv0 Link encap:Ethernet HWaddr 00:0D:3A:2D:5D:57
              UP BROADCAST RUNNING SLAVE MULTICAST MTU:1500 Metric:1
              RX packets:0 errors:0 dropped:0 overruns:0 frame:0
              TX packets:1931 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:1000
              RX bytes:0 (0 Bytes) TX bytes:1764473 (1.7 MB)
    sriovslv1 Link encap:Ethernet HWaddr 00:0D:3A:2C:5F:16
              UP BROADCAST RUNNING SLAVE MULTICAST MTU:1500 Metric:1
              RX packets:7616 errors:0 dropped:0 overruns:0 frame:0
              TX packets:13050 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:1000
              RX bytes:5813421 (5.5 MB) TX bytes:2628354 (2.5 MB)
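In this output each sriovslv interface carries the same hardware address as one of the ports (sriovslv1 matches port1, sriovslv0 matches port2). Assuming that this shared MAC address is what ties a virtual function to its port, the pairing can be sketched as below. The function name `pair_slaves_with_ports` and the `sriovslv` prefix default are assumptions based only on the output shown here.

```python
import re
from typing import Dict, Optional

# Captures "<name> Link encap:Ethernet HWaddr <mac>" entries; works on both
# the multi-line and the single-line form of the ifconfig output.
_ETH_RE = re.compile(
    r"(\S+)\s+Link encap:Ethernet\s+HWaddr\s+((?:[0-9A-Fa-f]{2}:){5}[0-9A-Fa-f]{2})"
)


def pair_slaves_with_ports(
    ifconfig_output: str, slave_prefix: str = "sriovslv"
) -> Dict[str, Optional[str]]:
    """Map each SR-IOV slave interface to the port sharing its MAC address."""
    macs = {name: mac.upper() for name, mac in _ETH_RE.findall(ifconfig_output)}
    ports_by_mac = {
        mac: name for name, mac in macs.items() if not name.startswith(slave_prefix)
    }
    return {
        name: ports_by_mac.get(mac)  # None when no port shares the MAC
        for name, mac in macs.items()
        if name.startswith(slave_prefix)
    }
```

A port that does not show up as a value in the result has no active virtual function behind it, which would be a hint that Accelerated Networking is not enabled on that NIC.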

Secondly, the network interface device information should show the correct speed, as on Linux.

    # diag hardware deviceinfo nic port1
    Name: port1
    Driver: hv_netvsc
    Version:
    Bus:
    Hwaddr: 00:0d:3a:2c:5f:16
    Permanent Hwaddr:00:0d:3a:2c:5f:16
    State: up
    Link: up
    Mtu: 1500
    Supported:
    Advertised:
    Speed: 40000full
    Auto: disabled
    RX Ring: 10486
    TX Ring: 192
    Rx packets: 2666
    Rx bytes: 772077
    Rx compressed: 0
    Rx dropped: 0
    Rx errors: 0
    Rx Length err: 0
    Rx Buf overflow: 0
    Rx Crc err: 0
    Rx Frame err: 0
    Rx Fifo overrun: 0
    Rx Missed packets: 0
    Tx packets: 280
    Tx bytes: 61114
    Tx compressed: 0
    Tx dropped: 0
    Tx errors: 0
    Tx Aborted err: 0
    Tx Carrier err: 0
    Tx Fifo overrun: 0
    Tx Heartbeat err: 0
    Tx Window err: 0
    Multicasts: 0
    Collisions: 0

And finally, the interrupts on the unit need to be attached to the Accelerated Networking Mellanox driver.

    # diagnose hardware sysinfo interrupts
             CPU0     CPU1     CPU2     CPU3
      0:       48        0        0        2   IO-APIC-edge      timer
      1:        0        0        0        8   IO-APIC-edge      i8042
      4:        5        1        3        3   IO-APIC-edge      serial
      8:        0        0        0        0   IO-APIC-edge      rtc
      9:        0        0        0        0   IO-APIC-fasteoi   acpi
     12:        0        0        0        3   IO-APIC-edge      i8042
     14:        0        0        0        0   IO-APIC-edge      ata_piix
     15:        0        0        0        0   IO-APIC-edge      ata_piix
     40:    14887        0        0        0   PCI-MSI-edge      mlx4-async@pci:0002:00:02.0
     41:        0      453        0        0   PCI-MSI-edge      sriovslv0-0
     42:        0        0       89        0   PCI-MSI-edge      sriovslv0-1
     43:        0        0        0      695   PCI-MSI-edge      sriovslv0-2
     44:      635        0        0        0   PCI-MSI-edge      sriovslv0-3
     45:        0    14897        0        0   PCI-MSI-edge      mlx4-async@pci:0001:00:02.0
     46:        0        0     3553        0   PCI-MSI-edge      sriovslv1-0
     47:        0        0        0     2474   PCI-MSI-edge      sriovslv1-1
     48:     3997        0        0        0   PCI-MSI-edge      sriovslv1-2
     49:        0     3273        0        0   PCI-MSI-edge      sriovslv1-3
    NMI:        0        0        0        0   Non-maskable interrupts
    LOC:   420708   407948   409165   416109   Local timer interrupts
    SPU:        0        0        0        0   Spurious interrupts
    PMI:        0        0        0        0   Performance monitoring interrupts
    IWI:        0        0        0        0   IRQ work interrupts
    RES:     7425     2919     2856     3375   Rescheduling interrupts
    CAL:      131      134      214      233   Function call interrupts
    TLB:       57       39       61       53   TLB shootdowns
    ERR:        0
    MIS:        0
